Tighter AI requirements call for new safety and security assessment tools

The pioneering European AI Act is casting a long shadow over AI-based systems and their use cases, drawing increasing attention to safety and security. The legislation also lays the groundwork for tomorrow’s AI certification programs. But you can’t certify without first evaluating. AI systems are increasingly pervasive: they handle sensitive data and perform tasks, some of them mission-critical, in a wide range of environments. The adversarial landscape is just as broad, and assessing it comprehensively is a difficult but crucial task. For machine learning models, and deep neural networks (DNNs) in particular, the attack surface is especially complex. These systems are, in effect, mathematical abstractions (with their many theoretical flaws) physically implemented in an environment that includes both software and hardware.

An IoT use case: deep neural networks at the Edge

CEA-Leti investigated the often-overlooked physical vulnerabilities of a common internet of things (IoT) configuration: deep neural network models running on 32-bit microcontrollers. Because the model's internal parameters are stored locally in device memory, they make excellent targets for attackers who seek to manipulate them, either to reverse-engineer the model or to alter its behavior. We demonstrated the impact of Bit-Flip Attacks (BFAs) on AI models: just a handful of bit-flips can significantly degrade the performance of convolutional neural network models, raising serious concerns for both security and evaluation.
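To give a sense of why a single bit-flip can matter, here is a minimal sketch, not the attack used in the study. It assumes weights stored as signed 8-bit integers in two's complement; the values in `weights` and `inputs`, and the helper names `flip_bit` and `dot`, are hypothetical and chosen only for illustration.

```python
def flip_bit(weight: int, bit: int) -> int:
    """Flip one bit of a signed 8-bit weight stored in two's complement."""
    raw = weight & 0xFF      # view the signed value as its raw byte
    raw ^= 1 << bit          # invert the chosen bit
    return raw - 256 if raw >= 128 else raw  # reinterpret the byte as signed


def dot(weights, inputs):
    """Weighted sum computed by a single toy neuron."""
    return sum(w * x for w, x in zip(weights, inputs))


weights = [3, -2, 5, 1]  # hypothetical int8 weights (assumption)
inputs = [1, 1, 1, 1]    # hypothetical input vector (assumption)

clean = dot(weights, inputs)

faulted_weights = list(weights)
faulted_weights[0] = flip_bit(weights[0], 7)  # flip the most significant bit
faulted = dot(faulted_weights, inputs)

print(clean, faulted)
```

Flipping the most significant bit turns the weight 3 into -125, so the neuron's output moves from 7 to -121. In a full network, a few such faults in well-chosen parameters can be enough to change the predicted class.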

Laying the groundwork for robust AI certifications

Until now, most research has focused on DRAM. We have demonstrated the relevance and effectiveness of using bit-sets (instead of bit-flips) in a BFA-like attack to extract confidential information from a protected black-box model. By comparing the model’s output decisions with and without faults, attackers can discover parameter values and then use them in reverse-engineering attacks. Our research charts a course toward robust evaluation protocols for embedded AI models on Cortex-M platforms with Flash memory. We used theoretical analysis and laser fault injection to better understand the relationships between model characteristics and attack efficiency. Our work has also demonstrated the value of simulation in facilitating the evaluation of AI models. These and future strides in the evaluation of AI security will be crucial for certifying embedded AI systems and designing protection mechanisms for them.
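The idea behind a bit-set leak can be illustrated with a deliberately simplified sketch. It is not the protocol used in the study: the model here is a single hypothetical 8-bit weight behind a threshold decision, the fault is simulated in software, and `SECRET_WEIGHT`, `THRESHOLD`, `decide`, and `recover_weight` are names invented for the example. The principle is that forcing a bit to 1 changes nothing if the bit was already 1, so an unchanged decision reveals its value.

```python
SECRET_WEIGHT = 0b01011010  # hypothetical 8-bit parameter, hidden from the attacker
THRESHOLD = 1 << 16         # toy threshold, large enough that every 0 bit is observable


def decide(x: int, set_mask: int = 0) -> bool:
    """Black-box decision; set_mask simulates a fault forcing stored bits to 1."""
    stored = SECRET_WEIGHT | set_mask  # a bit-set can only turn a 0 into a 1
    return stored * x >= THRESHOLD


def recover_weight(probes) -> int:
    """Infer each bit by comparing decisions with and without a bit-set fault."""
    recovered = 0
    for bit in range(8):
        mask = 1 << bit
        changed = any(decide(x) != decide(x, mask) for x in probes)
        if not changed:
            # Forcing the bit to 1 had no visible effect: it was already 1.
            recovered |= mask
    return recovered


probes = range(1, THRESHOLD + 1)  # attacker-chosen inputs
recovered = recover_weight(probes)
print(f"{recovered:08b}")
```

The attacker only ever sees decisions, never the stored value. In a real network an unchanged decision does not prove that a bit was 1, since the fault may simply not propagate to the output; the toy threshold is chosen so that this ambiguity does not arise here.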

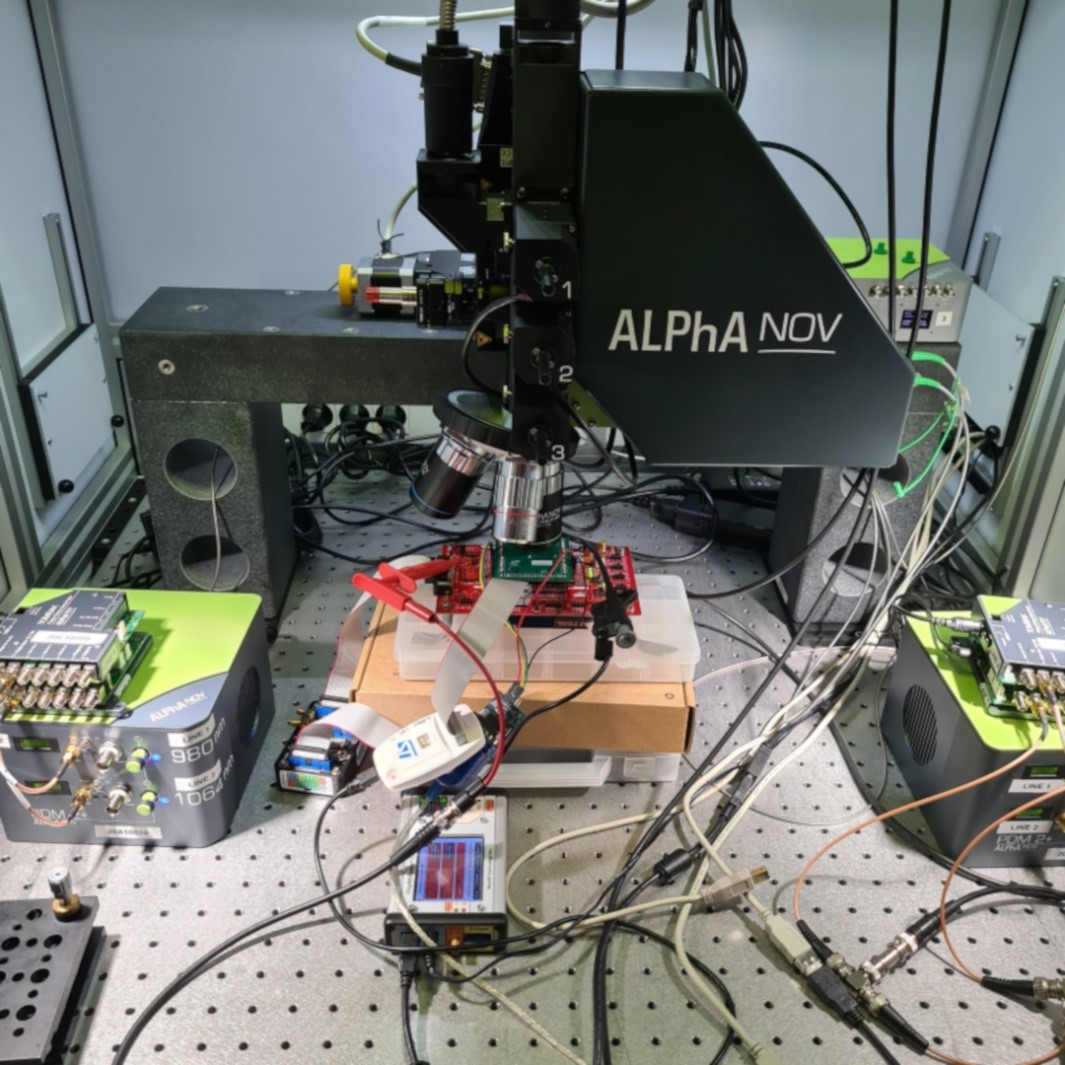

A laser injection platform used for evaluating the robustness of embedded neural networks.